NVIDIA Admits G-Sync Glitch Resulting in Huge Power Spikes at High Refresh Rates

John Williamson / 8 years ago

NVIDIA’s G-SYNC is a proprietary module embedded in select monitors that synchronizes the monitor’s refresh rate with the frames the GPU delivers. This creates a smooth experience and minimizes the stutter you would typically get from V-Sync. It also eliminates screen tearing, and some users argue it’s a more seamless experience than AMD’s FreeSync technology. Panels with G-SYNC carry a hefty price premium, so consumers understandably have high expectations.

Recently, a software bug emerged that significantly increases the GPU’s power draw at idle. Bizarrely, the GPU clock speeds ramp up too, but only significantly on monitors running at 144Hz or higher. Ryan Shrout of PCPer investigated the issue and reported:

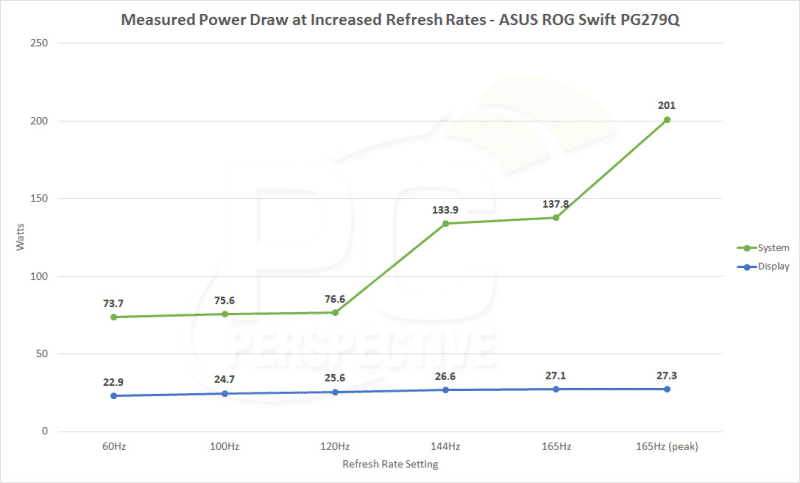

“But the jump to 144Hz is much more dramatic – idle system power jumps from 76 watts to almost 134 watts – an increase of 57 watts! Monitor power only increased by 1 watt at that transition though. At 165Hz we see another small increase, bringing the system power up to 137.8 watts.”

“When running the monitor at 60Hz, 100Hz and even 120Hz, the GPU clock speed sits comfortably at 135MHz. When we increase from 120Hz to 144Hz though, the GPU clock spikes to 885MHz and stays there, even at the Windows desktop. According to GPU-Z the GPU is running at approximately 30% of the maximum TDP.”

NVIDIA has acknowledged the strange power draw issue and is working on a fix to be included in an upcoming driver release. NVIDIA’s response reads:

“We checked into the observation you highlighted with the newest 165Hz G-SYNC monitors.

Guess what? You were right! That new monitor (or you) exposed a bug in the way our GPU was managing clocks for GSYNC and very high refresh rates.

As a result of your findings, we are fixing the bug which will lower the operating point of our GPUs back to the same power level for other displays.

We’ll have this fixed in an upcoming driver.”

I’d love to hear from people who own a G-SYNC display. Do you feel the module is worth the added cost when selecting a monitor?