PlayStation 4 & AMD Radeon R9 290X GPU Share The Same 8 ACEs

Peter Donnell / 11 years ago

We’ve seen quite a lot of details about the PlayStation 4 GPU and the new Radeon R9 290X Hawaii GPU, but this latest set of slides suggests that the Liverpool GPU inside the Sony PlayStation 4 could also be part of the same Volcanic Islands family of GPUs as the R9 290X.


Launch games for both the Xbox One and the PS4 look fantastic, and while the PC Master Race may argue that PC gaming is better (in terms of graphics it certainly is in some cases), there is no doubt that the new consoles are leaps and bounds ahead of their current-gen counterparts, and better is simply better. The question is how much raw power really sits inside the PlayStation 4 GPU, because, as we all know, release titles are no indication of the true power of the hardware: just compare PS3 launch titles with the games released this year!

R9 290X Hawaii GPU

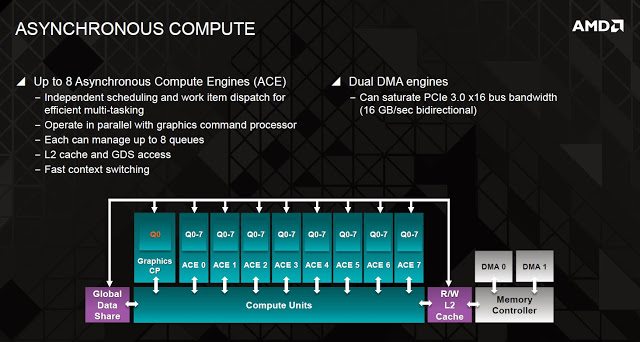

Slides of the R9 290X Hawaii GPU revealed that the Volcanic Islands GPU will also feature 8 Asynchronous Compute Engines (ACEs). Each of the 8 ACEs can manage up to 8 compute queues, for a total of 64 compute queues. Compare that to the HD 7970, which has only 2 ACEs that can each queue just 2 compute commands, for a total of 4. The Xbox One also has only 2 ACEs; they can each manage up to 8 compute queues, for a total of 16, which is still a long way short of the PS4’s 64.
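Those totals are simply the number of ACEs multiplied by the queues each ACE can manage. As a quick sanity check, here is a minimal Python sketch using the figures quoted in this article. The `GPUS` table and the `total_compute_queues` helper are hypothetical names made up for illustration; they are not part of any AMD or Sony tool.

```python
# Figures as quoted in this article: (number of ACEs, queues per ACE).
# This table is an illustrative structure, not an official AMD data format.
GPUS = {
    "Radeon R9 290X (Hawaii)": (8, 8),
    "PlayStation 4 (Liverpool)": (8, 8),
    "Xbox One": (2, 8),
    "Radeon HD 7970": (2, 2),
}


def total_compute_queues(aces: int, queues_per_ace: int) -> int:
    """Return the total compute queues: ACE count times queues per ACE."""
    return aces * queues_per_ace


if __name__ == "__main__":
    for name, (aces, per_ace) in GPUS.items():
        total = total_compute_queues(aces, per_ace)
        print(f"{name}: {aces} ACEs x {per_ace} queues = {total} compute queues")
```

Running it prints 64 for both the R9 290X and the PS4, 16 for the Xbox One and 4 for the HD 7970, matching the numbers above.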

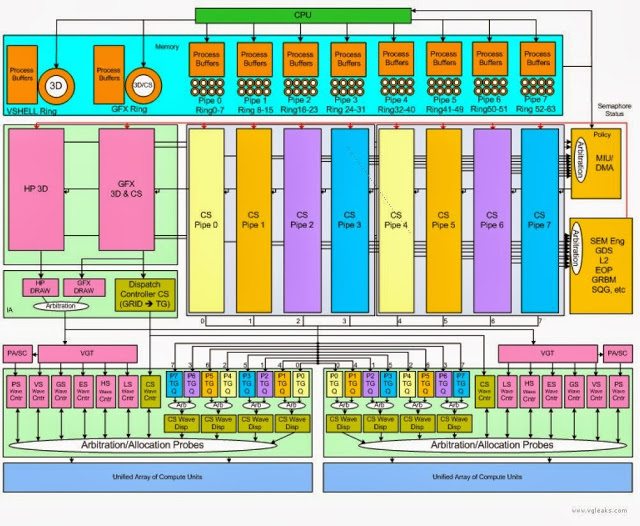

PlayStation 4 Liverpool GPU

PS4 GPU has a total of 2 rings and 64 queues on 10 pipelines

Graphics (GFX) ring and pipeline

- Same as R10XX

- Graphics and compute

- For game

High Priority Graphics (HP3D) ring and pipeline

- New for Liverpool

- Same as GFX pipeline except no compute capabilities

- For exclusive use by VShell

8 Compute-only pipelines

- Each pipeline has 8 queues, for a total of 64

- Replaces the 2 compute-only queues and pipelines on R10XX

- Can be used by both the game and VShell (likely assigned on a per-pipeline basis: 1 for VShell and 7 for the game)

- Queues can be allocated by game system or by middleware type

- Allows rendering and compute loads to be processed in parallel

- Liverpool compute-only pipelines do not have Constant Update Engines (present in R10XX cards)
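To make the slide’s numbers concrete, here is a small Python sketch that models the layout as plain data and checks that it adds up to 2 rings, 10 pipelines and 64 compute queues. The `Pipeline` class, the `liverpool_layout` and `summarize` functions and the `CP0` to `CP7` names are all hypothetical; this is not Sony’s or AMD’s API. The 1/7 split of compute pipelines between VShell and the game is only the slide’s own guess, so it is exposed as a parameter.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Pipeline:
    name: str
    fed_by: str          # "ring" (GFX, HP3D) or "queues" (compute-only pipelines)
    compute_queues: int  # compute queues feeding this pipeline; 0 for ring pipelines
    graphics: bool       # can process graphics work
    compute: bool        # can process compute work
    owner: str           # "game" or "VShell"


def liverpool_layout(vshell_compute_pipelines: int = 1) -> list[Pipeline]:
    """Hypothetical model of the Liverpool pipeline layout from the slide."""
    pipelines = [
        # GFX ring: graphics and compute, for the game
        Pipeline("GFX", "ring", 0, graphics=True, compute=True, owner="game"),
        # HP3D ring: graphics only, exclusive to VShell
        Pipeline("HP3D", "ring", 0, graphics=True, compute=False, owner="VShell"),
    ]
    # Eight compute-only pipelines with 8 queues each. The VShell/game split
    # is an assumption: the slide only says it is "likely" 1 and 7.
    for i in range(8):
        owner = "VShell" if i < vshell_compute_pipelines else "game"
        pipelines.append(
            Pipeline(f"CP{i}", "queues", 8, graphics=False, compute=True, owner=owner)
        )
    return pipelines


def summarize(pipelines: list[Pipeline]) -> dict:
    """Count rings, pipelines and compute queues, plus queues per owner."""
    queues_by_owner = Counter()
    for p in pipelines:
        queues_by_owner[p.owner] += p.compute_queues
    return {
        "rings": sum(p.fed_by == "ring" for p in pipelines),
        "pipelines": len(pipelines),
        "compute_queues": sum(p.compute_queues for p in pipelines),
        "queues_by_owner": dict(queues_by_owner),
    }


if __name__ == "__main__":
    print(summarize(liverpool_layout()))
```

With the default split, the game ends up with 56 of the 64 compute queues and VShell with 8; passing `vshell_compute_pipelines=0` hands all 64 to the game.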

Sure, this is all technical as hell, but when it comes to hardware like this, bigger numbers often translate to better performance: more compute queues let the GPU process more compute work in parallel with rendering. That’s great news for developers, and also a good indication that the hardware has a few tricks saved up that will benefit games in the future.

Thank you, Gamenmotion & VGLeaks, for providing us with this information.

Images courtesy of Gamenmotion.