MSI GTX 1060 Gaming X Graphics Card Review

John Williamson / 8 years ago

Introduction

NVIDIA’s Pascal architecture marks a major shift in performance per watt and revolves around the highly efficient 16nm FinFET manufacturing process. So far, the company has unleashed products designed for higher resolutions and for enthusiasts who are prepared to pay extra for a more fluid gaming experience. For example, AIB versions of the GTX 1080 in the UK can cost over £650 and have received hefty price hikes. Whether this will subside once supply increases or Sterling becomes more stable is unclear, but the current market price is unlikely to appeal to a large audience. While the GTX 1070 offers performance beyond the GTX Titan X for significantly less, it’s still too expensive for many people on a tight budget. According to AMD’s internal research, 84% of PC gamers select a graphics card within the $100-$300 price bracket.

Speaking of AMD, they recently unveiled a mainstream GPU, the RX 480, which delivers a “premium VR experience”. Prior to its release, the rumour mill was in full force speculating about the performance and some AMD fans hoped it would defeat the R9 390X while retailing for a mere $200. Clearly, this never came to fruition because Polaris was always intended to target affordability and provide a good entry point for PC gaming. Those with realistic expectations admire the RX 480’s excellent price to performance ratio and believe it’s a superb product. Unfortunately, the launch has been marred by the GPU’s power draw exceeding PCI-E slot specifications. This led to users reporting hardware damage and raised concerns about the impact of using the RX 480, especially when paired with a cheaper motherboard. Thankfully, AMD resolved this issue quickly via a driver update and explained the situation well.

Evidently, NVIDIA needed to react to the RX 480’s amazingly cheap price point and showcase their mainstream Pascal range. Originally, it seems that the GTX 1060 was designed to launch in two variants: a 6GB model initially, followed by a 3GB budget edition at a later date. Apparently, this idea has now been shelved and NVIDIA plans to re-brand the 3GB card as the GTX 1050. In a similar vein to the GTX 1070 and GTX 1080, the GTX 1060 will arrive with the option to purchase a Founders Edition card. However, this time, it can only be procured directly from NVIDIA unless their strategy changes. From a more technical standpoint, the GTX 1060 is able to replicate the performance of the GTX 980 at a more reasonable price.

Not only that, the GTX 1060 has a low 120-watt TDP and 6GB of GDDR5 memory running at an effective speed of 8012MHz. On another note, the new GPU features 10 SMs, 1280 CUDA Cores, a 1506MHz base clock, a 1708MHz boost clock, 80 Texture Units and 192GB/s of memory bandwidth. The GP106 core has a total of 48 ROPs and 4.4 billion transistors. This is much more than the previous generation GTX 960, which has 2.94 billion transistors. As you might expect, the GTX 1060 supports Pascal technologies such as Simultaneous Multi-Projection, Ansel and much more. Since the GTX 1080 and GTX 1070’s release, the reaction from developers to NVIDIA’s Simultaneous Multi-Projection has been incredibly positive and 30 games that take advantage of this technology are in development, including Pool Nation VR, Everest VR, Obduction, Adr1ft, and Raw Data. Even the non-VR title Unreal Tournament is adopting SMP to enhance image quality and performance. Since the Pascal architecture is pretty well-known by now, I’m going to move on to the sample sent for review.
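For anyone who wants to sanity-check the headline figures, here is a minimal Python sketch showing how the core count, texture unit count and memory bandwidth can be derived. The SM count, memory speed and transistor counts come from the specification quoted above. The 128 CUDA cores and 8 texture units per SM, and the 192-bit memory bus, are not stated in this review and are assumptions based on the card's published specification.

```python
# Figures quoted in the review.
SM_COUNT = 10
EFFECTIVE_MEMORY_MHZ = 8012
GTX_1060_TRANSISTORS_BN = 4.4
GTX_960_TRANSISTORS_BN = 2.94

# Assumptions: these values are NOT stated in the review.
CORES_PER_SM = 128
TEXTURE_UNITS_PER_SM = 8
MEMORY_BUS_BITS = 192


def memory_bandwidth_gb_s(effective_mhz, bus_bits):
    """Peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    transfers_per_second = effective_mhz * 1_000_000
    bytes_per_transfer = bus_bits / 8
    return transfers_per_second * bytes_per_transfer / 1_000_000_000


cuda_cores = SM_COUNT * CORES_PER_SM
texture_units = SM_COUNT * TEXTURE_UNITS_PER_SM
bandwidth = memory_bandwidth_gb_s(EFFECTIVE_MEMORY_MHZ, MEMORY_BUS_BITS)
transistor_ratio = GTX_1060_TRANSISTORS_BN / GTX_960_TRANSISTORS_BN

print(f"CUDA cores: {cuda_cores}")
print(f"Texture units: {texture_units}")
print(f"Memory bandwidth: {bandwidth:.1f} GB/s")
print(f"Transistors vs GTX 960: {transistor_ratio:.2f}x")
```

Under those assumptions, the results line up with the quoted 1280 CUDA Cores, 80 Texture Units and roughly 192GB/s, while the GP106 packs about one and a half times the transistors of the GTX 960.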

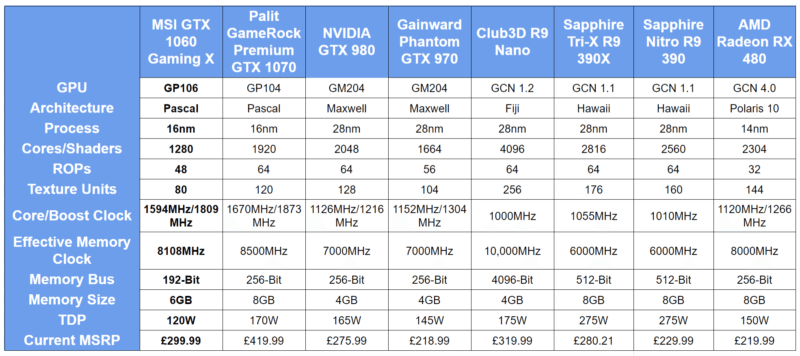

Instead of reviewing the Founders Edition on launch, we’ve decided to take a look at the MSI GTX 1060 Gaming X, which has a recommended retail price of £299.99. Please note, this may change after the NDA lifts as pricing is still being finalised. MSI has increased the base clock from 1506MHz to 1594MHz and the boost clock runs at an impressive 1809MHz. Also, the memory has been set to 8108MHz and I’m expecting some good overclocking headroom given the remarkable Twin Frozr VI cooling solution. It’s important to note that the specification quoted above applies when the OC profile has been enabled using MSI’s Gaming App. There’s been some controversy surrounding this practice and I’d like to disclose that MSI shipped out the card using the retail Gaming mode. However, I wanted to ascertain the maximum performance and decided to enable the OC profile. Putting that aside, I’m expecting the MSI GTX 1060 Gaming X to output impressive numbers and challenge the GTX 980 in a variety of demanding games.
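To put MSI's factory overclock into perspective, the short Python sketch below works out the percentage increase of the OC profile over the reference clocks. All of the clock speeds are taken from this review, while the function name `uplift_percent` is simply my own label for illustration.

```python
def uplift_percent(reference_mhz, factory_mhz):
    """Percentage increase of the factory clock over the reference clock."""
    return (factory_mhz - reference_mhz) / reference_mhz * 100


# Reference clocks versus MSI's OC profile, as quoted in the review (MHz).
clocks = {
    "base": (1506, 1594),
    "boost": (1708, 1809),
    "memory": (8012, 8108),
}

uplifts = {name: uplift_percent(ref, oc) for name, (ref, oc) in clocks.items()}

for name, value in uplifts.items():
    print(f"{name}: +{value:.1f}%")
```

This works out to a little under 6% on both the base and boost clocks and just over 1% on the memory, so the OC profile is a modest bump rather than a dramatic one.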

Specifications

Packing and Accessories

The MSI GTX 1060 Gaming X comes in a stylish red box which contains a striking snapshot of the graphics card. This theme is complemented by the NVIDIA branding and the two work well together. The packaging also displays key details about the card’s video memory and support for the latest DirectX 12 API.

On the rear, there’s information about the product’s RGB illumination, Twin Frozr VI cooler, and MSI Gaming App. As per usual with MSI’s packaging, the presentation is excellent and contributes to a stellar unboxing experience.

The graphics card is bundled with some attractive stickers, a quick user’s guide, a driver/software disc and a product registration leaflet.